Usage Modes

All GDC users can run jobs on Euler under the umbrella of the GDC share. A big advantage of this arrangement is that the usage of many users balances out, because not all users run jobs at the same time. A single user can therefore use more CPUs for short periods of time. As a rule of thumb, the fewer jobs you run over time, the more CPUs you can use at once.

If you use too many resources, your priority may be lowered and your jobs will not start. If your priority is low, we cannot increase it, and you have to wait until it is restored. Depending on the load on the cluster and on our share, you will usually not be able to use 200 CPUs and 1 TB of memory continuously for weeks.

If you use more than 60 CPUs and/or more than 300 GB of memory in total, the average efficiency of your jobs (CPU, memory and time) should be above 75%. This is often difficult to achieve because jobs are rarely completely independent and can slow each other down, for example when they read the same files.

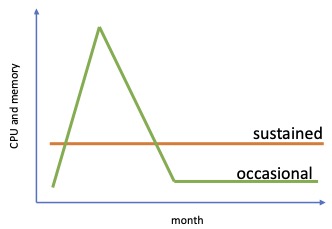
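The 75% rule above can be written as a small check. This is a minimal sketch: the function names and the idea of summarizing your jobs by one average efficiency value are illustrative assumptions, not a GDC tool; only the thresholds (60 CPUs, 300 GB, 75%) come from the text.

```python
def above_threshold(total_cpus: int, total_mem_gb: float) -> bool:
    """True if the jobs running at the same time use more than 60 CPUs
    and/or more than 300 GB of memory in total."""
    return total_cpus > 60 or total_mem_gb > 300


def follows_75_percent_rule(total_cpus: int, total_mem_gb: float,
                            avg_efficiency: float) -> bool:
    """Check the guideline; avg_efficiency is a fraction between 0 and 1
    (hypothetical input: how you aggregate it is up to you)."""
    if above_threshold(total_cpus, total_mem_gb):
        return avg_efficiency > 0.75
    # Below both thresholds, the 75% rule does not apply.
    return True


print(follows_75_percent_rule(48, 200, 0.40))  # True: below both thresholds
print(follows_75_percent_rule(96, 250, 0.60))  # False: > 60 CPUs, only 60% efficient
print(follows_75_percent_rule(40, 400, 0.80))  # True: > 300 GB, but 80% efficient
```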

We therefore recommend the following two usage profiles. In addition, limit the number of jobs you run in parallel by using job arrays or job chaining.
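A throttled job array keeps many similar tasks from all running at once, and a dependency chains a follow-up step behind them. The sketch below assumes that Euler's batch system is Slurm (the text does not name the scheduler). `sbatch --array=0-99%10`, `--dependency=afterok:<jobid>` and `--parsable` are standard Slurm options; the helper functions and the script names `sample_job.sh` and `merge_results.sh` are hypothetical.

```python
from __future__ import annotations

import subprocess


def array_submit_cmd(script: str, n_tasks: int, max_parallel: int) -> list[str]:
    """sbatch command for a job array of n_tasks tasks (indices 0..n_tasks-1),
    of which at most max_parallel run at the same time (the '%' throttle)."""
    if n_tasks < 1 or max_parallel < 1:
        raise ValueError("n_tasks and max_parallel must be at least 1")
    return ["sbatch", "--parsable", f"--array=0-{n_tasks - 1}%{max_parallel}", script]


def chained_submit_cmd(script: str, previous_job_id: str) -> list[str]:
    """sbatch command for a job that starts only after previous_job_id
    (for an array: all of its tasks) has completed successfully."""
    return ["sbatch", "--parsable", f"--dependency=afterok:{previous_job_id}", script]


def submit(cmd: list[str]) -> str:
    """Run sbatch and return the job ID it prints."""
    out = subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
    # With --parsable, sbatch prints "jobid" or "jobid;cluster".
    return out.strip().split(";")[0]
```

On a login node, `first = submit(array_submit_cmd("sample_job.sh", 100, 10))` followed by `submit(chained_submit_cmd("merge_results.sh", first))` would run 100 per-sample tasks, at most 10 at a time, and then a single merge step once all of them have succeeded.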

Sustained usage

- 24 CPUs and 120 GB of memory
- Long jobs
- Average CPU, memory or time efficiency < 50%

You run jobs almost constantly over a longer period using many CPUs or a lot of memory, either because your jobs have a long run time, because you regularly start new jobs, or because your jobs have an average efficiency (memory, CPU and time) of less than 50%. Entire pipelines (wrappers) such as DeepARG, metaWRAP, QIIME and the ATLAS pipeline, as well as R workflows such as dada2, also belong to this category.
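The three efficiencies can be computed from a job's accounting data. This is a minimal sketch, assuming CPU efficiency = CPU time / (cores × wall time) and memory efficiency = peak memory / requested memory (the definitions used by Slurm's `seff` utility), and time efficiency = wall time / requested time limit (an assumption, since the text does not define it). The `JobUsage` class and its field names are hypothetical, not an Euler or Slurm API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class JobUsage:
    """Accounting numbers for one finished job (hypothetical field names)."""
    cores: int               # CPU cores requested
    elapsed_h: float         # wall-clock run time in hours
    cpu_time_h: float        # CPU time summed over all cores, in hours
    mem_requested_gb: float  # memory requested
    mem_peak_gb: float       # peak memory actually used
    time_limit_h: float      # run-time limit requested, in hours


def efficiencies(job: JobUsage) -> dict[str, float]:
    """CPU, memory and time efficiency as fractions between 0 and 1."""
    return {
        "cpu": job.cpu_time_h / (job.cores * job.elapsed_h),
        "memory": job.mem_peak_gb / job.mem_requested_gb,
        "time": job.elapsed_h / job.time_limit_h,
    }


def mean_efficiency(jobs: list[JobUsage]) -> float:
    """Average of all CPU, memory and time efficiencies over the given jobs."""
    values = [v for job in jobs for v in efficiencies(job).values()]
    return sum(values) / len(values)


job = JobUsage(cores=8, elapsed_h=10, cpu_time_h=20,
               mem_requested_gb=64, mem_peak_gb=16, time_limit_h=24)
print(round(mean_efficiency([job]), 2))  # 0.31
```

With a mean of about 31%, this example job would fall into the sustained usage profile.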

Occasional usage

- 60 CPUs and 300 GB of memory
- Average CPU, memory and time efficiency > 50%

Most of our users fall into this category. You run jobs now and then, and the average CPU, memory and time efficiency of your jobs is above 50%.
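The two profiles can be summarized as a small decision rule. This is a sketch only: the function and parameter names are hypothetical, the CPU and memory figures are the ones listed for each profile, and treating exactly 50% as sustained usage is an assumption, since the text only names < 50% and > 50%.

```python
from typing import NamedTuple


class Profile(NamedTuple):
    name: str
    cpus: int
    mem_gb: int


SUSTAINED = Profile("sustained", 24, 120)
OCCASIONAL = Profile("occasional", 60, 300)


def recommended_profile(mean_efficiency: float, runs_almost_constantly: bool,
                        long_jobs: bool, runs_pipeline: bool = False) -> Profile:
    """Choose a usage profile from the criteria in the text.

    mean_efficiency is the average CPU, memory and time efficiency (0 to 1).
    Exactly 0.5 is treated as sustained (assumption), because occasional
    usage requires an efficiency above 50%.
    """
    if runs_almost_constantly or long_jobs or runs_pipeline or mean_efficiency <= 0.5:
        return SUSTAINED
    return OCCASIONAL


print(recommended_profile(0.7, runs_almost_constantly=False, long_jobs=False))
# Profile(name='occasional', cpus=60, mem_gb=300)
```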