This section examines common challenges and limitations that can affect scientific integrity and research reliability, including statistical errors, biases in data, analytical flaws, and ethical considerations related to data handling. It also explores the distinction between correlation and causation, highlighting how incorrect assumptions can lead to misleading conclusions. Recognizing these potential pitfalls is essential for conducting rigorous and transparent research."

Beyond bar plots: A clearer view with boxplots and violin plots

Bar charts can be misleading when presenting data because they only show summary statistics such as means, masking variability, skewness and outliers. This oversimplification can distort interpretation, especially when distributions are non-normal. In contrast, box plots - especially when overlaid with individual data points - reveal key distribution features, including medians, quartiles and potential outliers. Violin plots go a step further by visualising the full probability density, making it easier to spot multimodal distributions and asymmetries. By replacing bar plots with these richer visualisations, we ensure a more accurate, transparent and informative representation of the data.

Barplots hide the truth—boxplots and violin plots spill the tea!

The Perils of Correlation: When patterns mislead

Correlation is often mistaken for causation, leading to false conclusions in science and everyday reasoning. Just because two variables are statistically related does not mean that one causes the other. Spurious correlations - where unrelated factors appear to be related - can be misleading, and even statistically significant correlations may have little meaning if they are weak. A humorous example of this can be found on Tyler Vigen's Spurious Correlations website, which presents absurd but statistically significant relationships, such as the correlation between cheese consumption and deaths from tangled bedsheets. Without careful analysis and proper controls, correlation-based claims can distort reality and reinforce biases rather than reveal true causal relationships. Recognising these pitfalls is essential for sound scientific reasoning and decision making.

Correlation does not imply causation!

The Illusion of Precision: Numbers without context

Numbers are often used to lend credibility to weak or unsubstantiated arguments, but without proper accuracy and context, they can be downright misleading. Quantities can be miscounted, measurement procedures can be flawed, and assumptions can go unexamined, all of which can result in numbers that falsely support a flimsy claim. Relying on these numbers without questioning their origin or validity can lead to false conclusions, reinforcing shaky arguments with an illusion of precision. Always question the numbers behind the claim - accuracy and proper methodology are essential for true credibility.

Data don’t lie, but they sure love to stretch the truth!

Context Over Digits: The danger of misleading numbers

Beyond simply questioning numbers, it’s crucial to recognize how their presentation can distort reality. Numbers may seem objective and precise, but without context, they can be misleading or even meaningless. A figure presented with excessive decimal places or without relevant background information can create a false sense of accuracy, making data appear more reliable than it actually is. This can lead to poor decision-making and misinterpretation.

For example, when measuring DNA concentration with a NanoDrop spectrophotometer, reporting a value of 53.2749 ng/µL may appear highly precise. However, given the instrument's inherent variability and sensitivity to contaminants like RNA or protein, such precision is misleading. In reality, a rounded value (e.g., 53 ng/µL) is more appropriate and acknowledges the limitations of the measurement. True precision comes from understanding the accuracy of the method, not just reporting more decimal places.

More decimal places don’t make a number true—just fancier nonsense!

No Magic in the Black Box: Bad Data Leads to Bad Science

No matter how sophisticated an analysis is, its conclusions are only as good as the data on which it is based. This principle, often summarised as 'Garbage In, Garbage Out' (GIGO), highlights the fundamental truth that faulty, biased or poor quality input data will inevitably lead to unreliable or misleading results - no matter how advanced the methods applied to it.

Even the most powerful statistical models, machine learning algorithms or bioinformatics pipelines cannot fix fundamental problems in the data. If sequencing reads are contaminated, metadata is incorrect, or measurements are inconsistent, the results will be equally flawed. The illusion of rigour created by complex methods can sometimes mask these underlying problems, leading to false confidence in erroneous conclusions.

You can't plant weeds and expect roses.

The Self-Correcting Nature of Science: Why Any Paper Can Be Wrong

Science is a self-correcting process in which any scientific work can be wrong. Scientific knowledge evolves as new data, methods and perspectives emerge, so conclusions are always subject to revision. While peer review is an important filter, it is not infallible, and errors - whether due to statistical error, misinterpretation or biological variability - are common. Some papers may even be retracted due to fraud or irreproducibility. But this openness to challenge is science's greatest strength. By constantly testing, questioning and refining ideas, science advances and ensures that our understanding of the world becomes more accurate over time.

Science refines through trial and quest, where every idea may face a test.

Scientific Literature Bias: The disappearance of negative results

A well-known bias in scientific research is that positive results are more likely to be published, while negative or inconclusive results are often 'filed away'. This publication bias can distort the scientific literature, giving the misleading impression that certain treatments or hypotheses are more successful than they actually are. This is a major problem for meta-studies, which rely on the existing literature to draw conclusions. If a meta-study is based mainly on positive results, it can lead to overestimated effects and misinformed conclusions, potentially misguiding future research or policy. It's vital that the scientific community recognises and addresses this bias to ensure more accurate and comprehensive evidence synthesis.

Positive results get the spotlight; negative ones are backstage, waiting for their chance!

The "Savior Complex" in Science: It's all about sharing

Many scientists, driven by passion, sometimes present themselves as the sole saviors of the world—curing diseases or solving global issues on their own. This mindset forgets that science is about collaboration, sharing knowledge, and building on collective efforts. When researchers focus more on self-promotion than on advancing shared goals, it can hinder progress. True scientific advancement comes from openness, teamwork, and mutual respect, not individual glory.

Science is a team sport, not a solo mission to save the world!

Fancy Terms, Simple Tools: AI, Machine Learning & Deep Learning Are Not Magic

In today's world, terms like artificial intelligence (AI), machine learning (ML) and deep learning (DL) are often used to impress and inflate the perceived value of analytics. While these technologies are powerful, they are not inherently revolutionary in their predictive capabilities. They are simply advanced methods - some dating back decades - now supercharged by modern computing power and massive data sets.

The basics of predictive modelling, such as linear regression, decision trees and neural networks, have been used in medicine, finance and business since the 1950s. What's changed is not the existence of AI, but its scale, speed and accessibility.

AI, machine learning and deep learning are not magic. They don't automatically deliver better results just because they sound impressive. Instead, they offer flexible, data-driven approaches - but only if applied correctly with the right data and context. Remember, big data can lead to big mistakes, and high-dimensional data can lead to overfitting, where the model fits noise rather than true patterns. These methods are meant to enhance decision making, not replace it.

By understanding these technologies as evolutions of long-standing statistical methods, we can appreciate their true value - without getting lost in the hype.

Don't flex their tech—wow them with your science!

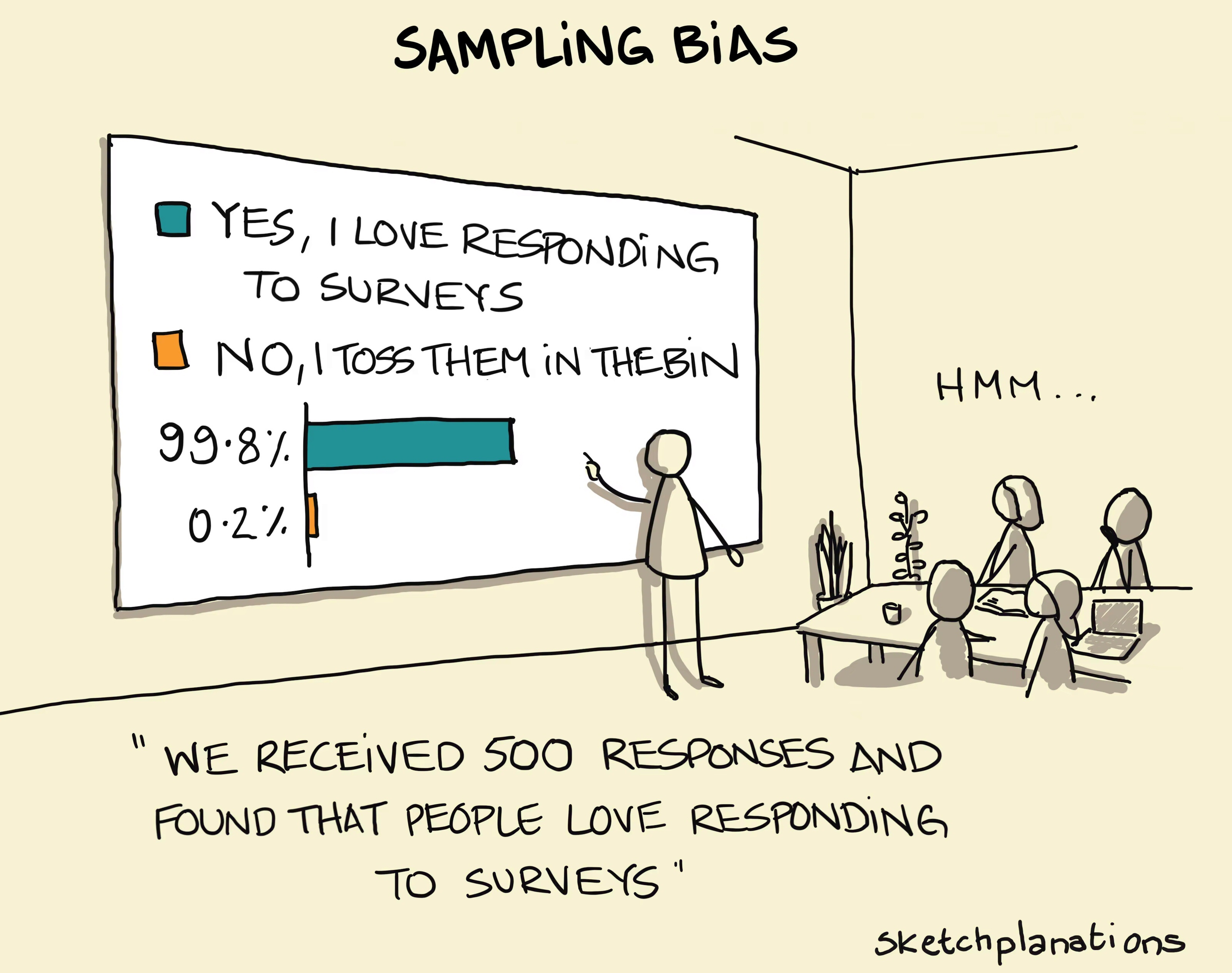

Sampling Bias: Off to a bad start

Sampling bias occurs when the sample selected for a study does not accurately represent the wider population, leading to biased or misleading results. In biological research, this can seriously affect conclusions about a species or ecosystem. For example, if a study of the health of a population of daphnids only collects healthy individuals because the sick ones are outside the sampling zone, the results will overestimate the overall health of the population. This bias can lead to incorrect conclusions, such as underestimating the effects of environmental stressors or overlooking critical health issues. Proper, random sampling is essential to obtain reliable and representative data.

Don’t write a review of a book by reading just the first chapter!