Learning Objectives

◇ Master basic Bash commands and gain an understanding of more advanced command functionality.

◇ Learn how to redirect command output and create log files for tracking processes.

◇ Develop the ability to locate and effectively use built-in help and documentation for terminal commands.

🎯 Who Is This Tutorial For?

This tutorial is designed for students and researchers who are new to the Linux terminal, with no previous experience required. Whether you're completely new to command line interfaces or have only used graphical tools, this guide will take you through the basics step by step. If you already have some experience with Linux, you will still find useful tips and best practices throughout.

Info

No programming or system administration background is needed. Just curiosity and a willingness to try things out!

Introduction to This Manual

A few notes before you begin:

Code snippets: Code snippets (chunks) are displayed in grey boxes. To copy the content directly to your clipboard, click the page icon (⎘) in the top right corner of each snippet.

Comments in code: Any text following a hash (#), also known as the pound or number sign, is a comment and will not be executed as code. The number of # characters doesn't affect this, so use comments to explain or document your code. Comments also help improve the readability and structure of your code (see below).

Home Sweet Home

Your home directory is your personal space on the system. It’s where you store your own files, run scripts, and organize data. When you open a terminal, you usually start here by default.

Each user on a Linux system has their own home directory—this helps keep files separated and secure.

echo ${HOME} # Shows the path to your home directory

pwd # Displays the current directory path - it should be your home directory

If you ever get lost, don't worry! You can always find your way back to your home directory. Here's how:

cd ${HOME} # Navigates to your home directory

cd ~ # Another way to go to your home directory using the tilde

cd # Simply typing `cd` with no arguments also takes you home

Permissions and Safety

You own everything in your home directory. This means you can read, write and execute your own files. Other users cannot usually access your files unless you explicitly allow them to. This system of permissions protects your data and prevents accidental changes by others.

Organization Best Practices

Because you'll be working with a lot of files, especially when analysing data, it's a good habit to keep things organised:

~/data

~/scripts

~/results

Avoid cluttering up your home directory with unorganised files - it quickly becomes messy and hard to navigate.

Permissions Matter (Optional)

In Linux, every file and folder has permissions that say who can read it, write to it, or run it. You can see these by typing ls -l. For example:

-rw-r--r-- 1 user group Jan 1 17:06 myfile.txt

That string at the beginning (-rw-r--r--) shows who can do what: the owner, the group, and everyone else.

If you want to change permissions, the chmod command lets you do that. For example:

chmod +x ~/scripts/myscript.sh # gives execute permission

See also Command Line Syntax in Terminal Basics.

Explaining Linux File Permission Numbers

Linux file permissions can be represented as a three-digit (octal) number. Each digit controls access for:

- First digit → Owner (User)

- Second digit → Group

- Third digit → Others (Everyone else)

Each digit is the sum of permission values:

| Permission | Symbol | Value |
|---|---|---|
| read | r | 4 |
| write | w | 2 |
| execute | x | 1 |

Example: 755

7 (Owner) = 4 + 2 + 1 = rwx → full access

5 (Group) = 4 + 0 + 1 = r-x → read + execute

5 (Others) = 4 + 0 + 1 = r-x → read + execute

Remember: Each permission digit is a sum: 4 for read, 2 for write, 1 for execute. Just add what you want the user/group/others to be able to do.
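To see the arithmetic in action, here is a minimal sketch that applies two numeric modes to a scratch file and reads the result back with ls -l. The file name and the temporary directory are placeholders chosen for illustration only.

```shell
# Work in a throwaway directory so nothing in your home is touched
demo_dir="$(mktemp -d)"
touch "${demo_dir}/myscript.sh"

chmod 755 "${demo_dir}/myscript.sh"      # 7 = rwx, 5 = r-x, 5 = r-x
perm_755="$(ls -l "${demo_dir}/myscript.sh" | cut -c1-10)"
echo "755 -> ${perm_755}"                # 755 -> -rwxr-xr-x

chmod 640 "${demo_dir}/myscript.sh"      # 6 = rw-, 4 = r--, 0 = ---
perm_640="$(ls -l "${demo_dir}/myscript.sh" | cut -c1-10)"
echo "640 -> ${perm_640}"                # 640 -> -rw-r-----

rm -r "${demo_dir}"                      # clean up
```

The cut command keeps only the first ten characters of the ls -l line, which is the permission string.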

Creating and Navigating Directories

In Linux, the file system is structured like a tree, starting from the root directory (/) and branching out into different directories, including your home directory. From there you can move between directories, access files and manage your resources. Understanding how to create directories and navigate between them is key to organising your work and moving around your environment efficiently.

Let's practice creating directories and moving between them using these essential commands:

- mkdir: make (create) a directory

- cd: change directory

- pwd: print the current working directory (where you are in the file system)

Create a Directory in Your Home

To get started, let's create a new directory in your home directory:

cd ${HOME} # Make sure you're in your home directory

mkdir terminal # Creates a folder named 'terminal' in your home directory

mkdir terminal/workDIR # Creates a subfolder named 'workDIR' in your terminal directory

mkdir terminal/workDIR/subDIR # Creates a subfolder named 'subDIR' inside 'workDIR'

You can also create multiple levels of directories in one command:

mkdir -p ${HOME}/terminal/workDIR2/subDIR2 # The '-p' flag ensures the entire path is created if it doesn't exist

Navigating to a Directory

Now, let's move into the newly created directories:

cd terminal # Move to the 'terminal' folder

cd workDIR # Move to 'workDIR' inside 'terminal'

cd subDIR # Move to 'subDIR' inside 'workDIR'

You can also jump directly to a specific directory without stepping through each one:

cd ${HOME}/terminal/workDIR2/subDIR2 # Jump straight to 'subDIR2'

pwd # Use 'pwd' to check your current location

Directory Structure

Here’s a visual representation of your directory structure:

Tree:                    Path:
HOME                     ${HOME}                              # Your home directory
└── terminal             ${HOME}/terminal                     # Folder inside your home
    ├── workDIR          ${HOME}/terminal/workDIR             # Folder inside 'terminal'
    │   └── subDIR       ${HOME}/terminal/workDIR/subDIR      # Folder inside 'workDIR'
    └── workDIR2         ${HOME}/terminal/workDIR2            # Another folder inside 'terminal'
        └── subDIR2      ${HOME}/terminal/workDIR2/subDIR2    # Folder inside 'workDIR2'

Is it possible to create multiple directories in one go?

Absolutely! With the terminal, almost anything is possible.

mkdir ${HOME}/terminal/{dir1,dir2,dir3}

This command creates three directories (dir1, dir2, and dir3) all at once inside your terminal folder.
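The same brace syntax combines well with the -p flag. The following is a small sketch, assuming Bash and using a scratch location and folder names (data, scripts, results) borrowed from the organisation tips earlier; adapt the path to your own project.

```shell
project_dir="$(mktemp -d)/project"       # placeholder location for this demo

# -p creates missing parents; the braces expand to three separate paths
mkdir -p "${project_dir}"/{data,scripts,results}

# Put 'echo' in front to preview what the shell makes of the braces
echo mkdir -p "${project_dir}"/{data,scripts,results}

ls "${project_dir}"
```

Prefixing a command with echo is a harmless way to check an expansion before running it for real.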

Move out of a Directory

With cd, you can not only navigate into directories, but also move back up to parent directories just as easily.

Step-by-Step Navigation: Let's move from a subdirectory back to its parent directories one step at a time:

cd ${HOME}/terminal/workDIR/subDIR # Move into 'subDIR'

pwd # Verify your current location

cd .. # Move up one directory to 'workDIR'

cd .. # Move up another level to 'terminal'

pwd # Check where you are now

All in One Go: You can also move up multiple levels in one command:

cd ${HOME}/terminal/workDIR/subDIR # Move into 'subDIR'

cd ../.. # Go up two levels at once

pwd # Confirm you're back in 'terminal'

By using .., we move up one directory level at a time. By chaining (e.g., ../..), you can quickly jump multiple levels.

Removing Folders

Attempting to Remove a Directory with Subfolders:

If you try to remove a directory that contains subfolders, it won't work:

rmdir ${HOME}/terminal/workDIR

- ✘ This won't work because workDIR contains subfolders.

- ☛ Note: rmdir only deletes empty directories. If the directory has any files or subdirectories, it cannot be removed.

Removing Subfolders Step-by-Step

To remove subdirectories first and then the parent directory, follow these steps:

rmdir ${HOME}/terminal/workDIR/subDIR # Removes subDIR

rmdir ${HOME}/terminal/workDIR/ # Removes workDIR

ls -l ${HOME}/terminal # Verify if the deletion worked

Ready to Level Up? Here are some challenges designed to give you hands-on experience and deepen your understanding.

❖ Challenge #1: Delete the folder workDIR2 and everything inside it.

Insight for Challenge #1

rmdir ${HOME}/terminal/workDIR2/subDIR2

rmdir ${HOME}/terminal/workDIR2/

You cannot use rmdir to delete a folder that is not empty. The rmdir command in Linux is designed to remove only empty directories. If the directory contains files or subdirectories, rmdir will fail.

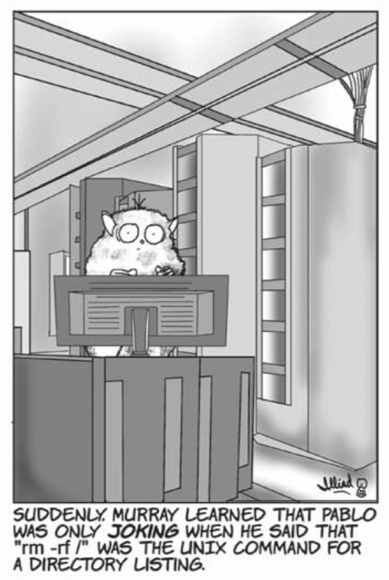

To remove a directory that contains files or other directories, you can use the rm command with the -r (recursive) option:

rm -r ${HOME}/terminal/workDIR2/

❖ Challenge #2: Do the following:

- Create two directories (Run240415 and Run240412) in your working directory (${HOME}/terminal/).

- Create a subdirectory (infoA and infoB) for each directory.

- Switch between the two subdirectories.

Insight for Challenge #2

## Define working directory

wd="${HOME}/terminal"

## Define user variables for directories

pathA="${wd}/Run240415/infoA/"

pathB="${wd}/Run240412/infoB/"

## Create both directories

cd ${wd}

mkdir -p ${pathA} ${pathB}

## Move around

cd ${pathA} # go to the first directory

pwd

cd ${pathB}

pwd

❖ Challenge #3: The rmdir command only removes empty folders. Can you find an alternative way to remove the folder and all the subfolders at once? Tip: Use the rm (remove) command and check the manual page for help (man rm).

Insight for Challenge #3

We can use the remove rm command with the recursive -r option.

Be careful with the force -f option - there is no undo and gone is gone!

rm -ri ${HOME}/terminal/Run240415 ${HOME}/terminal/Run240412

# ➜ Option -r, -R, --recursive: remove directories and their contents recursively

# ➜ Option -i: safety belt, asks before every removal (note: if both -i and -f are given, the one that comes last wins)

There is an alternative way to delete the two folders:

mkdir -p ${HOME}/terminal/Run240415/infoA/ ${HOME}/terminal/Run240412/infoB/

rm -fr ${HOME}/terminal/Run24041[25]/

# ➜ Option -f, --force: ignore nonexistent files and arguments, never prompt

❖ Challenge #4: Does it matter if you use capital letters in file or folder names? For example, is test.txt the same as TEST.TXT, or FOLDER_A the same as folder_a? Task: Find a way to test if the terminal you are working with is case-sensitive or not.

Insight for Challenge #4

Some file systems are not case sensitive. It is important to know if TEST and test are the same on your computer.

## Test Files

touch file.txt

touch FILE.TXT

ls -l

echo "TEST" >> FILE.TXT

cat file.txt

rm FILE.TXT

# If `cat file.txt` printed TEST, then file.txt == FILE.TXT ➜ the file system is not case-sensitive

## Test Folder

mkdir TEST

mkdir test

# If you see "mkdir: test: File exists" ➜ the file system is not case-sensitive

In Linux, the terminal is case-sensitive, meaning commands, file names, and directories must be typed with the correct capitalization.

In macOS (Apple's Terminal), the default file system (APFS, formerly HFS+) is case-insensitive but case-preserving: names keep the capitalization you typed, yet file.txt and FILE.TXT refer to the same file, in Finder and in the terminal alike. A volume can also be formatted as case-sensitive, so it is worth testing.

In Windows, the terminal (Command Prompt or PowerShell) is case-insensitive, meaning commands and file names can be typed in any case. However, the Windows Subsystem for Linux (WSL) behaves case-sensitively, like Linux.
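The manual test from the insight can be condensed into a few lines that report the result directly. This is only a sketch: the variable names are made up, and the scratch directory created by mktemp may sit on a different file system than your home directory.

```shell
# Note: to test a specific location, set test_dir to a folder of your choice
test_dir="$(mktemp -d)"

touch "${test_dir}/file.txt"

# If the upper-case name already "exists", both names point to the same file
if [ -e "${test_dir}/FILE.TXT" ]; then
    fs_case="case-insensitive"
else
    fs_case="case-sensitive"
fi
echo "The file system under ${test_dir} is ${fs_case}."

rm -r "${test_dir}"                      # clean up
```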

Data Streams

Data streams are fundamental concepts in Linux, representing the flow of data through the system. Understanding these streams allows you to control how your programs interact with data, making your command line operations more efficient and powerful.

Every command (or programme) we run from the command line interacts with three streams of data. These streams can be modified in various ways to produce useful and interesting results.

Data Streams

- STDIN (0) - Standard input (data received by the command)

- STDOUT (1) - Standard output (by default, printed to the terminal)

- STDERR (2) - Standard error (by default, printed to the terminal)

Redirect Output (overwrite)

By default, STDOUT and STDERR are printed to the terminal. However, we can redirect these streams to files, which is particularly useful for saving output or error messages.

## Print a message to the terminal

echo "Hello Terminal" # This prints the message to the terminal

icho "Hello Terminal" # Typo, does not work and prints an error to the terminal

## Redirect `STDOUT` and `STDERR` to separate files

echo "Hello Terminal" 1> text1.txt 2> errors.txt

# `1>` redirects standard output (STDOUT) to text1.txt

# `2>` redirects standard error (STDERR) to errors.txt

more text1.txt # You can also use `less` or `cat` instead of `more`

more errors.txt # This will be empty because there was no error

## Repeat with the typo: the error message now ends up in errors.txt

icho "Hello Terminal #1" 1> text1.txt 2> errors.txt

cat text1.txt # This will be empty because of the typo in the command

cat errors.txt # This will contain the error message

## Redirect both `STDOUT` and `STDERR` to the same file

echo "Hello Terminal #2" > text2.txt 2>&1

cat text2.txt

## Redirect (only) `STDOUT` to a file

echo "Hello Terminal #3" > text3.txt

cat text3.txt
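One detail of the combined form is easy to get wrong: redirections are processed from left to right, so 2>&1 has to come after the file redirection. The sketch below illustrates this with a made-up helper function (speak) that writes one line to each stream.

```shell
demo_dir="$(mktemp -d)"

# Hypothetical helper that writes one line to each output stream
speak() {
    echo "this goes to STDOUT"
    echo "this goes to STDERR" >&2
}

speak > "${demo_dir}/both.txt" 2>&1          # both lines land in both.txt
speak 2>&1 > "${demo_dir}/stdout_only.txt"   # STDERR still goes to the terminal

lines_both="$(wc -l < "${demo_dir}/both.txt" | tr -d ' ')"
lines_stdout_only="$(wc -l < "${demo_dir}/stdout_only.txt" | tr -d ' ')"
echo "both.txt: ${lines_both} lines, stdout_only.txt: ${lines_stdout_only} line"

rm -r "${demo_dir}"                          # clean up
```

In the second call, 2>&1 copies the destination STDOUT has at that moment (the terminal), and only afterwards is STDOUT pointed at the file.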

Warning

If you redirect STDOUT or STDERR to an existing file with >, the file will be overwritten.

## Overwrite the previous message in text1.txt

echo "Nothing in life is to be feared." > text1.txt

more text1.txt

You can print to different files and then combine (concatenate) these files into a single file.

## Print another message to a different file

echo "It is only to be understood." > text2.txt

## Merge the contents of the files into a new file

cat text1.txt text2.txt > text12.txt

cat text12.txt

Redirect Output (Append)

Instead of merging multiple output files, you can use the double greater-than operator (>>) to append new output to an existing file.

## Add (>>) a third line to the combined file

echo "Marie Curie" >> text12.txt

cat text12.txt

Redirecting Input from a File

The less-than operator (<) allows you to redirect input from a file, changing the direction of the data flow.

<command> file.txt # do something with the file

<command> < file.txt # feed the file to the command as input

These two commands look similar, but there is a subtle difference in how they handle the input.

## Count the number of lines in a text file using two different approaches:

wc -l text12.txt > count.txt # Version #1: filename is included in output

wc -l < text12.txt >> count.txt # Version #2: only the line count is output

## What was different?

cat count.txt

# Output:

# 3 text12.txt

# 3 (filename is missing)

## An alternative method to count the number of lines using a pipeline (more on this later)

cat text12.txt | wc -l

When we redirect STDIN, the data is sent "anonymously," meaning the command doesn't know the source of the data. This can be useful to avoid including unwanted extra information, such as the file name in the output. Here's an example:

## This approach includes unnecessary information (the file name) in the output

wc -l text12.txt > count.txt

echo "We have $(cat count.txt) lines."

## This approach is better because it only outputs the line count, without the file name

wc -l < text12.txt > count.txt

echo "We have $(cat count.txt) lines."
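If the count is only needed inside a message, the intermediate count.txt can be skipped by capturing the output in a variable. A minimal sketch, using a temporary file with the three lines from the earlier example as stand-in data:

```shell
demo_file="$(mktemp)"
printf 'Nothing in life is to be feared.\nIt is only to be understood.\nMarie Curie\n' > "${demo_file}"

# $( ) captures STDOUT; tr removes the padding that some wc versions add
n_lines="$(wc -l < "${demo_file}" | tr -d ' ')"
echo "We have ${n_lines} lines."            # We have 3 lines.

rm "${demo_file}"                           # clean up
```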

❖ Challenge #5: In the previous example, the file name was unnecessary. Can you think of a scenario where including the file name along with the line count would be useful?

Insight #5

Assume you have multiple files and you need to count the lines of each file.

wc -l text1.txt text2.txt text12.txt

# 1 text1.txt

# 1 text2.txt

# 3 text12.txt

# Alternative Solution

wc -l text*.txt

Piping

Piping allows you to send the output (STDOUT) of one command directly as input (STDIN) to another command. This is done using the vertical bar |. Piping is a powerful way to chain commands together in a sequence.

## Create a multi-line message

echo -e "Think Like a Proton\nStay Positive" > proton.txt

# ➜ \n creates a newline, dividing the string into two lines

cat proton.txt

## Show the first or last line of the file

cat proton.txt | head -n 1 # Display the first line

cat proton.txt | tail -n 1 # Display the last line

## Count the number of lines in another file

cat text12.txt | wc -l
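Pipes are not limited to two commands. As a short sketch with made-up input text, the following chains three stages and stores the result in a variable:

```shell
# Pick the second line: head keeps lines 1-2, tail keeps the last of those
second_line="$(printf 'first\nsecond\nthird\n' | head -n 2 | tail -n 1)"
echo "${second_line}"                       # second

# Count the words on the first line of a two-line message
n_words="$(printf 'Think Like a Proton\nStay Positive\n' | head -n 1 | wc -w | tr -d ' ')"
echo "${n_words}"                           # 4
```

Each stage only sees what the previous stage printed to STDOUT, which makes it easy to build a pipeline step by step and inspect the intermediate output.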

Example: Log-Files

You can use redirection to create log files of your terminal sessions or any command output. Some applications offer a verbose (-v) option, which can provide additional details when testing. You can redirect this output to a file and check it for errors or warnings later.

## Create an empty log file and fill it with system information

rm -f LOG.txt; touch LOG.txt # -f: no error if LOG.txt does not exist yet

echo "-------------------------------" >> LOG.txt

echo "Test Log File" >> LOG.txt

date +"Date: %d/%m/%y" >> LOG.txt

echo "User: ${USER}" >> LOG.txt

echo "-------------------------------" >> LOG.txt

env | grep "LOGNAME" -A 1 >> LOG.txt

env | grep "SHELL" >> LOG.txt

echo "-------------------------------" >> LOG.txt

echo "My working directory: ${PWD}" >> LOG.txt

echo -n "My grep version: " >> LOG.txt

grep --version >> LOG.txt

echo "-------------------------------" >> LOG.txt

clear; cat LOG.txt
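Repeating >> LOG.txt on every line works, but the commands can also be grouped in curly braces and redirected once. The following is a sketch of that variant; the temporary file stands in for LOG.txt, and "unknown" is an assumed fallback in case USER is not set.

```shell
log_file="$(mktemp)"                        # placeholder for LOG.txt

# Everything printed inside { ... } goes through the single redirection below
{
    echo "-------------------------------"
    echo "Test Log File"
    date +"Date: %d/%m/%y"
    echo "User: ${USER:-unknown}"
    echo "My working directory: ${PWD}"
    echo "-------------------------------"
} > "${log_file}" 2>&1

cat "${log_file}"
```

Because 2>&1 is attached to the group, error messages from any of the commands end up in the log as well.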

❖ Challenge #6.1: Create a virtual dice and store the results of three rolls in a text file. To generate random numbers, you can use the special built-in variable $RANDOM: Bash replaces it with a new pseudo-random integer each time it is referenced.

## Generate a random number

echo ${RANDOM}

This command outputs a random number between 0 and 32767. To simulate a dice roll, we need to restrict this range to [1-6].

## Restrict the range to [0-6]

echo $(( RANDOM % 7))

## Adjust to get a range between [1-6]

echo $((1 + RANDOM % 6))

# or

echo $((RANDOM % 6 + 1))

Now, let's roll the dice three times and save the results in a file.

Insight #6.1

6.1A Step-by-Step

echo $((1 + RANDOM % 6)) > random_number_1.tmp

echo $((1 + RANDOM % 6)) > random_number_2.tmp

echo $((1 + RANDOM % 6)) > random_number_3.tmp

cat random_number_[123].tmp > random_numbers_S1.txt

rm -i *.tmp

cat random_numbers_S1.txt

6.1B Without temporary files

echo $((1 + RANDOM % 6)) > random_numbers_2.txt

echo $((1 + RANDOM % 6)) >> random_numbers_2.txt

echo $((1 + RANDOM % 6)) >> random_numbers_2.txt

cat random_numbers_2.txt

6.1C Using a FOR loop (for more advanced users)

for ((i=0; i<3; i++)); do

random_number=$((1 + RANDOM % 6))

echo "Random number ${i}: $random_number"

done > random_numbers.txt

cat random_numbers.txt

6.1D Another FOR loop solution

for i in {1..3}; do

echo $((RANDOM % 6 + 1))

done > random_numbers.txt

cat random_numbers.txt

❖ Challenge #6.2: For debugging or any scenario where reproducibility is important, you need to ensure that your random number sequences are consistent. Can you think of a way to make random numbers predictable?

Insight #6.2

Since ${RANDOM} generates pseudo-random numbers, the sequence is deterministic for a given seed. Assigning a value to RANDOM sets the seed, so the same seed reproduces the same sequence (on the same Bash version).

RANDOM=123; echo $RANDOM

echo $RANDOM

RANDOM=123; echo $RANDOM

Seeding the Random Number Generator: Set the seed once, before the first roll, and the whole series of rolls becomes reproducible.

RANDOM=123

for i in {1..5}; do

echo $((RANDOM % 6 + 1))

done > random_numbers_1.txt

If you change the seed value, the sequence will differ.

RANDOM=321

for i in {1..5}; do

echo $((RANDOM % 6 + 1))

done > random_numbers_2.txt

RANDOM=123

for i in {1..5}; do

echo $((RANDOM % 6 + 1))

done > random_numbers_3.txt

Now, compare the results:

diff --brief random_numbers_1.txt random_numbers_2.txt

diff --brief random_numbers_1.txt random_numbers_3.txt

By setting the seed at the beginning of your script, you ensure that the same sequence of random numbers is generated every time you run the code.
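The comparison can also be done without writing files. The sketch below wraps the loop in a helper function (roll_dice is a made-up name, not a Bash built-in) and compares two runs with the same seed; it assumes Bash, since RANDOM is not available in every shell.

```shell
# Hypothetical helper: roll_dice <seed> <number_of_rolls>
roll_dice() {
    RANDOM=$1                               # seed the generator
    local i
    for ((i = 0; i < $2; i++)); do
        echo $((RANDOM % 6 + 1))
    done
}

run_a="$(roll_dice 123 5)"
run_b="$(roll_dice 123 5)"

if [ "${run_a}" = "${run_b}" ]; then
    echo "Same seed, same sequence."
fi
```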

Copy, Rename and Remove

In Linux, managing files involves three basic tasks:

- Copying (cp): Duplicate files or directories.

- Renaming/Moving (mv): Change file names or move them to new locations.

- Removing (rm): Delete files or directories permanently.

These simple commands are essential for efficiently handling files in the Linux environment.

More details, please!

The cp command lets you create a duplicate of a file or directory. This is useful when you want to make a backup or create multiple versions of a file.

The mv command is used to rename a file or move it to a different location. Unlike copying, this operation does not create a duplicate—it's more efficient when you simply want to change the file name or move it elsewhere.

The rm command is used to delete files or directories permanently. Once removed, files cannot be easily recovered, so it's important to use this command carefully.

## Copy a file - the original file remains unchanged

cp text12.txt Marie_Curie.txt

ls -l

cat text12.txt Marie_Curie.txt

# Compare the two files to check for differences

diff text12.txt Marie_Curie.txt

## Rename (move) a file - the content stays the same, only the name changes

mv LOG.txt logfile.txt # LOG.txt was created in the log-file example above

ls -l

## Remove file(s) - permanently deletes the specified files

rm -i text12.txt text.txt

ls -l

❖ Challenge #7.1: What is the difference between these two commands?

cp file.txt newfile.txt

cat file.txt > newfile.txt

Insight #7.1

- The first command creates an exact copy of file.txt, regardless of the file type. This method is reliable and works for any file format.

cp file.pdf newfile.pdf # ✔︎

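- The second command reads the content of file.txt and writes it to newfile.txt. The content arrives intact, but the new file is created by the shell, so metadata such as permissions is not carried over. It is not the recommended way to copy files such as PDFs.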

cat file.pdf > newfile.pdf # Not recommended

Using cat to copy a PDF file might work, but it's not the best approach. cat is meant for reading and concatenating files, not for copying them.

For more control, especially with larger files or when you need specific parameters, you can use the dd command:

dd if=file.pdf of=newfile.pdf

cp vs. cat

Commands like cp and dd are built for copying files, which makes them a more reliable choice than cat for this purpose: cp carries over the file permissions and can preserve timestamps (-p) or copy whole directories (-r), while dd gives control over block sizes and offsets.
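One visible difference between the two approaches is what happens to the permissions. The sketch below assumes a typical umask (such as 022) and uses made-up file names in a scratch directory: both copies have identical content, but only the cp copy stays executable.

```shell
demo_dir="$(mktemp -d)"
echo 'echo "hello"' > "${demo_dir}/script.sh"
chmod 755 "${demo_dir}/script.sh"           # make the original executable

cp "${demo_dir}/script.sh" "${demo_dir}/via_cp.sh"
cat "${demo_dir}/script.sh" > "${demo_dir}/via_cat.sh"

# Same content in both copies ...
cmp "${demo_dir}/script.sh" "${demo_dir}/via_cp.sh"  && echo "cp:  content identical"
cmp "${demo_dir}/script.sh" "${demo_dir}/via_cat.sh" && echo "cat: content identical"

# ... but compare the permission strings in the first column
ls -l "${demo_dir}"
```

The file written through > is created by the shell with default permissions, so the execute bit of the original is not carried over.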

❖ Challenge #7.2: When would you choose mv instead of cp?

cp file.txt newfile.txt

mv file.txt newfile.txt

Insight #7.2

- cp creates a duplicate of the file, preserving the original. This is safer, especially if you're unsure whether the original will be needed again. However, it uses more disk space and can be slower for large files.

- mv simply renames or relocates the file without making a copy. This is faster and more efficient because it doesn't duplicate the data. Use mv when you don't need to keep the original file or want to move it to a new location.

Comments

Code comments are essential for making your code understandable, maintainable, and well-documented. They clarify complex logic, making it easier for others (and your future self) to understand the purpose and functionality of different parts of the code. Comments also help structure your code by breaking it down into logical sections, enhancing readability. Additionally, they serve as in-line documentation, providing valuable context directly within the code, which is particularly useful in larger projects. In short, well-written comments improve the clarity, organization, and long-term usability of your code.

Here is a simple bash example. You don't need to understand every line, but note how comments (#) are used to explain and structure the code for improved readability.

## \\\\\|///// ##

## ASCII Art ##

## /////|\\\\\ ##

## Set up the working directory

mkdir -p ${HOME}/EvoGen/BioInf/terminal # Create the directory (no error if it already exists)

cd ${HOME}/EvoGen/BioInf/terminal # Navigate to the directory

## (a) Create an ASCII Ant

echo "My ASCII-Ant" > ant.txt # Create a file and write the title

echo " \(¨)/ " >> ant.txt # Add the head and front legs

echo " -( )- " >> ant.txt # Add the thorax and middle legs

echo " /(_)\ " >> ant.txt # Add the abdomen and hind legs

echo "" >> ant.txt # Add an empty line

## (b) Create an ASCII Bee

echo " <\ " > bee.txt # Create a file and write the first line

echo " (¨)(_)(()))=- " >> bee.txt # Add the second line

echo " \ |// /__ " >> bee.txt # Add the third line

echo " | )/ ) " >> bee.txt # Add the fourth line

echo " _ _ " >> bee.txt # Add the last line

echo "" >> bee.txt # Add an empty line

## (c) Display the insects

cat ant.txt # Display the ant

tac bee.txt # Display the bee in reverse order (GNU/Linux)

# If 'tac' is unavailable (e.g. on macOS), use instead:

# tail -r bee.txt # BSD/macOS alternative to reverse the bee

# My Comments:

# (!) Neither 'tac' nor 'tail -r' is available everywhere: 'tac' is part of GNU coreutils, 'tail -r' is a BSD extension.

# (?) Can you flip the ant horizontally?

# (?) Is there a better way to generate ASCII art?

Wildcards

Wildcards in the Linux terminal are powerful tools that allow users to work with multiple files and directories efficiently by using patterns instead of typing out each name individually. The most common wildcards are * (matches any number of characters), ? (matches a single character), and [] (matches any one character within the brackets). These can be used in commands like ls, cp, rm, and others to select groups of files based on their names or extensions, making file management faster and more flexible. Wildcards are essential for automating tasks and handling large numbers of files with minimal effort.

## List all text files

ls *.txt # list all files with ending .txt

ls text?.txt # list all files starting with text, followed by one character, and ending with .txt

ls text[123].txt # list all files starting with text, followed by 1,2 or 3, and ending with .txt

# ➜ * any characters

# ➜ ? one character

# ➜ [123] a group - meaning 1, 2, or 3

## Remove multiple files

rm -i text1.txt text2.txt text3.txt

rm -i text[123].txt

❖ Challenge #8.1: Can you find a command line to delete the index files (I1 and I2) but keep the forward (R1) and reverse (R2) reads?

Sample_GX0I1_R1.fq.gz

Sample_GX0I1_R2.fq.gz

Sample_GX0I1_I1.fq.gz

Sample_GX0I1_I2.fq.gz

Sample_GX0I2_R1.fq.gz

Sample_GX0I2_R2.fq.gz

Sample_GX0I2_I1.fq.gz

Sample_GX0I2_I2.fq.gz

Insight 8.1

There is usually more than one possible solution. Some might be better (e.g. faster, more secure) than others, but it is paramount that you understand what you are doing.

## Insight 8.1a

rm -i Sample_GX0I1_I1.fq.gz Sample_GX0I1_I2.fq.gz Sample_GX0I2_I1.fq.gz Sample_GX0I2_I2.fq.gz

# ➜ Safe and it works but imagine you have a few hundred files.

## Insight 8.1b

rm -i Sample_GX0I?_I?.fq.gz

# ➜ Also safe and would work just fine as long as all samples follow the same name structure.

## Insight 8.1c

rm -i *_I[12].*

# ➜ Short and precise but can be dangerous.

## Tip: Test your wildcards with ls first.

ls -ah *_I[12].*
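A safe way to practise is to recreate the file names as empty files in a scratch directory and try the pattern there. This is a sketch under that assumption; the loop variables sample and kind are arbitrary names.

```shell
demo_dir="$(mktemp -d)"

# Recreate the eight example file names as empty files
for sample in GX0I1 GX0I2; do
    for kind in R1 R2 I1 I2; do
        touch "${demo_dir}/Sample_${sample}_${kind}.fq.gz"
    done
done

ls "${demo_dir}"/*_I[12].fq.gz              # preview: lists the four index files
rm "${demo_dir}"/*_I[12].fq.gz              # same pattern, now for real

remaining="$(ls "${demo_dir}" | wc -l | tr -d ' ')"
echo "${remaining} files left"              # 4 files left
```

Note that the sample names themselves contain I1 and I2; the leading underscore and the trailing dot in the pattern are what keep the R1 and R2 files out of the match.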

❖ Challenge #8.2: What is the problem with the following command? Can you correct it?

# cat sequence*.fa >> sequence_all.fa # ✖︎✖︎✖︎ Do not use!

Insight #8.2

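If sequence_all.fa already exists (for example from an earlier run), the wildcard sequence*.fa also matches the output file, so cat would read from the very file it is appending to. Depending on the cat implementation, the command either stops with an error such as "input file is output file" or keeps growing the file in an endless loop until your hard drive is full. Better/correct solutions include: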

cat sequence*.fa >> all_sequence.fa

cat sequence*.fa >> different_path/sequence_all.fa

Terminal History

You may be familiar with the history of your Internet browser. The Terminal also has a history. This is great because with the command history we can not only search the past, but it also means that we do not have to retype previous commands. Use the up and down arrows to move through your history. You can also access it:

history

With no options (default), you will see a list of previous commands with line numbers. Lines prefixed with a * have been modified. An argument of n lists only the last n lines.

-c clear history

-d offset Delete the history entry at position offset.

-d start-end Delete the history entries between positions start and end

-a Append the new history lines to the history file.

-n Append the history lines not already read from the history file to the current history list.

-r Read the history file and append its contents to the history list.

-w Write out the current history list to the history file.

Syntax examples:

history 5 # show the last five entries

fc -l 5 10 # list history entries 5 to 10

history -d 2-3 # delete entries 2 and 3 (the range syntax requires Bash 5.0 or newer)

history -c # clear entire history

history [-anrw] [filename]

❖ Challenge #9.1: Why would you need a history of your commands?

Insight #9.1

There are many good reasons. Let me list two that are obvious to me. I am happy to learn new ones if you would like to share.

- You might have noticed that you can navigate the history with the up and down arrow keys. This saves a lot of time when typing the same or a similar command.

- You can also use the history for troubleshooting or to keep a log file of your session.

❖ Challenge #9.2: Preserve your history!

- Create a text file with a title and your username.

- Add the last 20 command lines you used to the file.

- Add a date to the bottom of the file.

Insight #9.2

echo "=== Save My History ===" > MyHistory.txt

echo "${USER}" >> MyHistory.txt

echo "-----------------------" >> MyHistory.txt

history | tail -n 20 >> MyHistory.txt

echo "-----------------------" >> MyHistory.txt

date "+%A, %d.%B %Y" >> MyHistory.txt

clear; cat MyHistory.txt

Math Arithmetic

I am not saying it is perfect, but it is possible. Here are some basic mathematical operations.

The legacy way to do calculations with integers is to use expr.

expr 7 - 2

expr 7 + 4

expr 7 \* 3

expr 9 / 3 # integer division only: floating-point arithmetic does not work

For floating-point arithmetic you can use bc:

echo "7-2" | bc

echo "7-4" | bc

echo "7*2" | bc

echo "7/2" | bc # prints 3: without scale, bc drops the decimal places

echo "scale=2; 7/2" | bc
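For integers, the $(( )) syntax already used in the dice example is a built-in alternative to expr. A brief sketch with arbitrary variable names:

```shell
difference=$((7 - 2))
product=$((7 * 3))                          # no backslash needed inside $(( ))
quotient=$((7 / 2))                         # integer division: the remainder is dropped
remainder=$((7 % 2))                        # modulo gives the remainder

echo "${difference} ${product} ${quotient} ${remainder}"   # 5 21 3 1
```

Like expr, this only handles integers, so bc remains the tool of choice for decimal results.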

Working With Sequence Files

It is important to play around with a simple example to understand the idea behind a terminal command. However, once we have a solid foundation, we should move on to more practical problems and examples.

# Download a sequence fasta file

pwd # make sure this is the right place for the download

curl -O https://www.gdc-docs.ethz.ch/GeneticDiversityAnalysis/GDA/data/RDP_16S_Archaea_Subset.fasta

# A closer look at the file

ls -lh RDP_16S_Archaea_Subset.fasta

# Count the number of lines in the file

wc -l RDP_16S_Archaea_Subset.fasta

# Have a look at the first 15 lines

head -n 15 RDP_16S_Archaea_Subset.fasta

# Have a look at the last 15 lines

tail -n 15 RDP_16S_Archaea_Subset.fasta

# Scroll through the fasta file

# Be careful with bigger files.

less RDP_16S_Archaea_Subset.fasta # remember to exit with [q]

# Count the number of sequences

grep ">" RDP_16S_Archaea_Subset.fasta | wc -l

grep ">" -c RDP_16S_Archaea_Subset.fasta

# Find a specific sequence motif and highlight it

grep "cggattagatacccg" --color RDP_16S_Archaea_Subset.fasta

# How often does a sequence motif occur

grep "cgggaggc" -c RDP_16S_Archaea_Subset.fasta

# Find similar sequence motifs (step-by-step)

grep "cgggaggc" -c RDP_16S_Archaea_Subset.fasta

grep "cgggtggc" -c RDP_16S_Archaea_Subset.fasta

grep "cgggcggc" -c RDP_16S_Archaea_Subset.fasta

grep "cggggggc" -c RDP_16S_Archaea_Subset.fasta

# A faster alternative

grep "cggg[atcg]ggc" -c RDP_16S_Archaea_Subset.fasta

# Find a long series of, e.g., Gs

grep "g" -c RDP_16S_Archaea_Subset.fasta

grep "gg" -c RDP_16S_Archaea_Subset.fasta

grep "gggg" -c RDP_16S_Archaea_Subset.fasta

grep -E -c "g{6}" RDP_16S_Archaea_Subset.fasta
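Keep in mind that grep -c counts matching lines, not individual matches. If a motif can occur more than once per line, one option is to print every match on its own line with -o and count those. The sketch below uses two made-up sequences rather than the downloaded file.

```shell
demo_fasta="$(mktemp)"
# Made-up sequences: seq_1 contains the motif twice on one line
printf '>seq_1\ncgggaggcttcgggtggc\n>seq_2\nttcgggcggctt\n' > "${demo_fasta}"

matching_lines="$(grep -c "cggg[atcg]ggc" "${demo_fasta}")"
all_matches="$(grep -o "cggg[atcg]ggc" "${demo_fasta}" | wc -l | tr -d ' ')"

echo "lines with motif: ${matching_lines}"   # lines with motif: 2
echo "motif occurrences: ${all_matches}"     # motif occurrences: 3

rm "${demo_fasta}"                           # clean up
```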

❖ Challenge #10: The search for sequence motifs is very limited. Can you see why this is? How could you overcome the limitations?

Insight #10

There are at least two things to keep in mind that can have a negative impact on the search.

1 - The search is case sensitive. So a search must contain both (upper and lower case) or the sequences must be formatted in the same way.

tr '[:upper:]' '[:lower:]' < RDP_16S_Archaea_Subset.fasta > RDP_16S_Archaea_Subset_AllLow.fa

2 - The sequence in a fasta file may extend over several lines. Therefore, wrapped sequence motifs will not be recognised. You would have to reformat the fasta file to make the sequence line up.

# Wrapped Sequences

>seq_1

tgctgcaccc

cccgcactgc

>seq_2

tgctgcaacc

cccgcattgc

# Lined Up Sequence

>seq_1

tgctgcaccccccgcactgc

>seq_2

tgctgcaacccccgcattgc

We will see how to solve this when we get to the Remote Terminal chapter, which deals with manipulating FASTA files in more detail.

Helpful Resources

⬇︎ Linux Cheatsheet ⬇︎ Linux Pocket Guide

If you are new to the command line you might find these links useful:

In case you prefer a movie ...

- Beginner's Guide to the Bash Terminal (YouTube Movie)

- How to use the Command Line | Terminal Basics for Beginners (YouTube Movie)

This is only a small and limited selection. There is more, much more.