Learning Objectives

◇ Gain a clear understanding of what Decision Trees and Random Forests are and how they work.

◇ Learn about the ensemble nature of Random Forest, where it combines multiple decision trees to improve predictive performance.

◇ Understand the role of randomness in Random Forest, including random sampling (bootstrapping) and random feature selection.

Machine learning (ML) is a field of artificial intelligence (AI) that focuses on creating algorithms that allow computers to learn from data and make predictions based on it. A decision tree is a basic machine learning model that makes decisions by dividing data into branches based on feature values, leading to a final decision or prediction. Random forests extend decision trees by creating a collection (or "forest") of many decision trees. Each tree in the forest makes its own prediction, and the final output is determined by aggregating the results (e.g., majority vote for classification or average for regression). This aggregation typically increases accuracy and reduces the risk of overfitting compared to a single decision tree.

Decision Tree

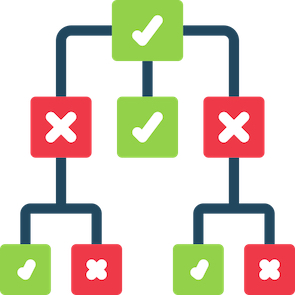

A decision tree is a tree-like structure consisting of nodes and branches. It's used to make decisions or predictions by recursively partitioning data into subsets based on the values of input features.

Decision trees are typically constructed using recursive binary splitting, where the dataset is split into two subsets at each internal node. This process continues until a stopping criterion is met, such as a maximum tree depth, a minimum number of samples per leaf, or a purity threshold for classification.
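The recursive splitting described above can be sketched in base R on the built-in `iris` data. This is a minimal illustration, not a production implementation: the function names (`gini`, `best_split`, `grow_tree`), the use of Gini impurity as the purity measure, and the default stopping values are all assumptions of this sketch.

```r
# Gini impurity of a vector of class labels (0 means the node is pure).
gini <- function(y) {
  p <- table(y) / length(y)
  1 - sum(p^2)
}

# Search every feature and every midpoint between adjacent observed values
# for the split with the lowest weighted impurity of the two children.
# Splits that would leave fewer than min_leaf samples in a child are skipped.
best_split <- function(X, y, min_leaf) {
  best <- list(feature = NULL, threshold = NA, score = Inf)
  for (f in names(X)) {
    v <- sort(unique(X[[f]]))
    if (length(v) < 2) next
    for (thr in (head(v, -1) + tail(v, -1)) / 2) {
      left <- X[[f]] < thr
      n_left <- sum(left)
      n_right <- sum(!left)
      if (n_left < min_leaf || n_right < min_leaf) next
      score <- (n_left * gini(y[left]) + n_right * gini(y[!left])) / length(y)
      if (score < best$score) {
        best <- list(feature = f, threshold = thr, score = score)
      }
    }
  }
  best
}

# Recursive binary splitting with three stopping criteria:
# a pure node, a maximum depth, and a minimum number of samples per leaf.
grow_tree <- function(X, y, depth = 0, max_depth = 3, min_leaf = 5) {
  leaf <- list(leaf = TRUE, prediction = names(which.max(table(y))), n = length(y))
  if (gini(y) == 0 || depth >= max_depth) return(leaf)
  s <- best_split(X, y, min_leaf)
  if (is.null(s$feature)) return(leaf)  # no admissible split was found
  left <- X[[s$feature]] < s$threshold
  list(
    leaf = FALSE, feature = s$feature, threshold = s$threshold,
    left  = grow_tree(X[left, , drop = FALSE], y[left], depth + 1, max_depth, min_leaf),
    right = grow_tree(X[!left, , drop = FALSE], y[!left], depth + 1, max_depth, min_leaf)
  )
}

tree <- grow_tree(iris[, 1:4], iris$Species, max_depth = 2)
str(tree, max.level = 1)
```

Each internal node stores the chosen feature and threshold, and each leaf stores the majority class of the samples that reached it.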

To make a prediction or classification, you start at the root node and follow the path down the tree based on the conditions. You will eventually reach a leaf node that provides the prediction or class label.
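The root-to-leaf walk can be illustrated with a small hand-written tree stored as nested lists. The tree structure, the thresholds, and the helper name `predict_one` are illustrative assumptions of this sketch, not values taken from a fitted model in this text.

```r
# A hand-written tree: internal nodes hold a feature and a threshold,
# leaves hold a prediction. Thresholds are illustrative only.
tree <- list(
  feature = "Petal.Length", threshold = 2.45,
  left = list(prediction = "setosa"),
  right = list(
    feature = "Petal.Width", threshold = 1.75,
    left = list(prediction = "versicolor"),
    right = list(prediction = "virginica")
  )
)

# Walk from the root to a leaf: go left when "feature < threshold" holds,
# right otherwise, until a node with a prediction is reached.
predict_one <- function(node, obs) {
  while (is.null(node$prediction)) {
    node <- if (obs[[node$feature]] < node$threshold) node$left else node$right
  }
  node$prediction
}

flower <- list(Sepal.Length = 5.1, Sepal.Width = 3.5,
               Petal.Length = 1.4, Petal.Width = 0.2)
predict_one(tree, flower)  # "setosa"

# Apply the same walk to every row of the built-in iris data.
predictions <- vapply(seq_len(nrow(iris)),
                      function(i) predict_one(tree, iris[i, ]),
                      character(1))
accuracy <- mean(predictions == iris$Species)
```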

At each internal node, a decision tree algorithm selects the feature and threshold that best divides the data into more homogeneous subsets. The goal is to maximise the information gain (for classification) or minimise the mean squared error (for regression).
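As a worked example of split selection for classification, the sketch below computes the information gain of every candidate threshold on a single feature of the built-in `iris` data and keeps the best one. The function names `entropy` and `info_gain`, and the choice of midpoints between observed values as candidate thresholds, are assumptions of this sketch.

```r
# Shannon entropy (in bits) of a vector of class labels.
entropy <- function(y) {
  p <- table(y) / length(y)
  p <- p[p > 0]  # drop empty classes so that 0 * log2(0) is never evaluated
  -sum(p * log2(p))
}

# Information gain of the split "x < threshold":
# parent entropy minus the size-weighted entropy of the two children.
info_gain <- function(x, y, threshold) {
  left <- x < threshold
  w <- mean(left)  # fraction of samples sent to the left child
  entropy(y) - (w * entropy(y[left]) + (1 - w) * entropy(y[!left]))
}

# Candidate thresholds: midpoints between adjacent observed values.
v <- sort(unique(iris$Petal.Length))
candidates <- (head(v, -1) + tail(v, -1)) / 2
gains <- sapply(candidates,
                function(thr) info_gain(iris$Petal.Length, iris$Species, thr))

best_threshold <- candidates[which.max(gains)]
best_gain <- max(gains)
c(threshold = best_threshold, gain = best_gain)
```

The best threshold on `Petal.Length` isolates all setosa flowers in one child, which is why its gain is the largest among the candidates. For regression the same search would minimise the mean squared error of the children instead.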

Decision trees can be improved using ensemble methods such as Random Forests and Gradient Boosting, which combine multiple trees to improve prediction accuracy and reduce overfitting.

Random Forest

Random Forest is a widely used machine learning algorithm for classification and regression tasks. During the training phase, it builds a large number of decision trees. During the prediction phase, the most common prediction across all the trees (for classification) or the average of their predictions (for regression) is used as the final prediction.

The fundamental building blocks of Random Forests are decision trees: simple models that recursively divide the data into subsets based on feature (attribute) values in order to make predictions.

The "random" aspect of Random Forests is derived from two primary sources of randomness:

a) Bootstrap sampling: each decision tree within the forest is trained on a random sample of the training data drawn with replacement, known as "bootstrapping". Combining trees trained in this way is called "bagging" (bootstrap aggregating). Training each tree on a different sample makes the trees more diverse, which reduces overfitting.

b) Random feature selection: each tree considers only a random subset of features at each decision node. This avoids any one feature dominating the decision-making process and results in more varied trees.
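Both sources of randomness can be sketched in a few lines of base R on the built-in `iris` data. The seed and the variable names are arbitrary choices for this sketch, and taking the square root of the number of features as the subset size is a common convention for classification that is assumed here, not something stated in this text.

```r
set.seed(42)  # arbitrary seed, only to make the sketch reproducible

n <- nrow(iris)
features <- setdiff(names(iris), "Species")

# a) Bootstrap sample: draw n row indices WITH replacement, so some rows
#    appear several times and others are left out of this tree's training set.
boot_idx <- sample(n, size = n, replace = TRUE)
boot_data <- iris[boot_idx, ]
frac_unique <- length(unique(boot_idx)) / n  # about 0.63 on average

# b) Random feature subset: at each node only mtry features are candidates.
mtry <- floor(sqrt(length(features)))
node_features <- sample(features, mtry)

frac_unique
node_features
```

In a forest, step a) is repeated once per tree and step b) is repeated at every node of every tree.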

After training many decision trees on different data samples and with different feature subsets, Random Forests combine their predictions via a majority vote (for classification) or averaging (for regression). This ensemble technique reduces the variance of the predictions and frequently delivers more accurate predictions than individual trees.
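The aggregation step itself is simple. The sketch below uses made-up per-tree outputs (not results from a fitted model) and a hypothetical helper `majority_vote` to show both cases.

```r
# Classification: return the class predicted by the most trees.
# On a tie, which.max() keeps the first class in alphabetical order.
majority_vote <- function(votes) {
  names(which.max(table(votes)))
}

# Made-up predictions from five trees for one observation.
tree_votes <- c("versicolor", "virginica", "versicolor", "setosa", "versicolor")
forest_class <- majority_vote(tree_votes)  # "versicolor"

# Regression: average the numeric predictions of the trees.
tree_estimates <- c(4.8, 5.1, 5.0, 4.7)
forest_value <- mean(tree_estimates)       # 4.9
```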

Random Forests are known for their robustness to noisy data and outliers. Because they aggregate many trees, they have a lower tendency to memorise the training data than a single decision tree, making them better at generalising to unseen data.

In essence, a Random Forest is a collection of decision trees that uses randomness in data sampling and feature selection to improve predictive accuracy and robustness. Consequently, it is a powerful and adaptable machine learning algorithm with many potential applications.

Iris Example

## Your current working directory

getwd()

## Create a new directory and go there

dir.create("RandomForests"); setwd("RandomForests")

## Download the R script

rf1.url <- "https://www.gdc-docs.ethz.ch/UniBS/EvolutionaryGenetics/BioInf/script/DT_RM_Introduction.zip"

utils::download.file(rf1.url, destfile = "DT_RM_Introduction.zip")

## Unzip File

unzip("DT_RM_Introduction.zip")

## Cleanup

file.remove("DT_RM_Introduction.zip")

## Open Rmd File

file.edit("DT_RM_Introduction.Rmd")

## View Report

rstudioapi::viewer("DT_RM_Introduction.html")
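For readers who want a quick look at a fitted forest before opening the report, the following sketch trains one on the built-in `iris` data. It is independent of the downloaded script, whose contents are not shown here, and it assumes the `randomForest` package is installed; the seed and the values chosen for `ntree` and `mtry` are illustrative.

```r
# Assumes the randomForest package is available; otherwise the fit is skipped.
if (requireNamespace("randomForest", quietly = TRUE)) {
  set.seed(1)  # arbitrary seed for reproducibility
  fit <- randomForest::randomForest(
    Species ~ ., data = iris,
    ntree = 500,        # number of trees in the forest
    mtry = 2,           # features considered at each split
    importance = TRUE   # also record variable importance
  )
  print(fit)                            # out-of-bag error and confusion matrix
  print(randomForest::importance(fit))  # per-feature importance measures
} else {
  fit <- NULL
  message("Package 'randomForest' is not installed; skipping the example.")
}
```

The printed summary reports the out-of-bag error, an estimate of the error on unseen data computed from the rows each tree did not see in its bootstrap sample.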