Learning Objectives

Main

◇ Understand the basic principles and differences between massive-parallel sequencing (MPS) platforms.

◇ Understand sample indexing and sequence (read) types.

Minor

◇ Understand the importance of a read data archive and be able to access such data.

◇ Understand the advantages, limitations and applications of MPS in e.g. genomics, transcriptomics or metabarcoding.

Lecture Notes

⬇︎ MPS (NGS)

Massively Parallel Sequencing

Massive parallel sequencing or massively parallel sequencing is any of several high-throughput approaches to DNA sequencing using the concept of massively parallel processing.

MPS ~ NGS

I use the term massively parallel sequencing (MPS) because it is a more general term and it also includes newer sequencing technologies. Next-generation sequencing (NGS) refers to PCR-based sequencing technologies (e.g. Roche 454, Illumina), while single-molecule sequencing (e.g. PacBio or ONT) is often referred to as next-next or third-generation sequencing. I keep it simple and use MPS.

Biological and medical science has been (and continues to be) revolutionised by rapid advances in modern DNA sequencing technologies.

"The major factors, such as increasing applications of NGS, speed, cost, and accuracy, efficient replacement for traditional technologies, and drug discovery applications demanding NGS technology are expected to drive the growth of the overall market." (ResearchAndMarkets.com)

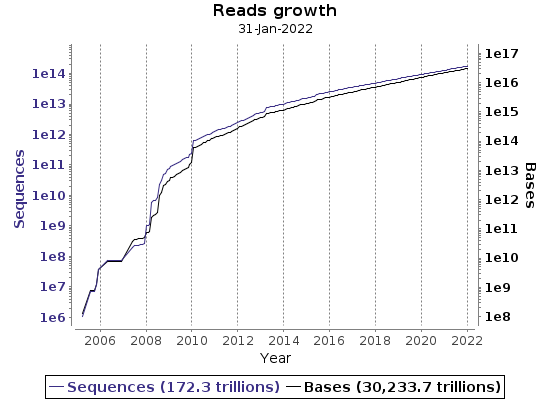

The ease of data generation has shifted the main research focus from loci to genomes, increased sample numbers, required data management strategies, and changed data analysis. As a result, nucleotide sequence archives (ENA or NCBI) are growing daily.

ENA regularly collects various statistics on data growth.

Sequencing Technology Explained

(First Generation) Sequencing

Next (Second) Generation Sequencing

- 454 Sequencing - Explained

- Ion Torrent

- Illumina Sequencing Technology

- Element Biosciences - Aviti System / Avidity Base Chemistry Infographic

- Singular Genomics

Third (Single-Molecule) Generation Sequencing

(Single-Molecule) Genome Mapping

Yes we can (now)!

High-throughput sequencing (HTS) is a powerful tool, but generating large amounts of sequencing data without a clear purpose or understanding can lead to many challenges. Sequencing efforts guided by clear hypotheses and research objectives will ensure that the data generated is relevant and makes a meaningful contribution to scientific understanding. Different sequencing technologies have different strengths and weaknesses. It's important to choose the right technology based on the specific needs of the study, such as read length, accuracy, bias and coverage. Understanding these limitations is essential for accurate data interpretation and avoiding misleading conclusions.

Comparison

There are many detailed reports comparing different MPS technologies. The problem is that they are out of date by the time they are published. Sequencing technology is developing rapidly and it is difficult to keep up with the latest developments. The following is a rough and personal guide.

Pros & Cons

◇ Illumina: High accuracy and throughput, but relatively short read lengths.

◇ BGI: Competitive price and high throughput, but may have different performance characteristics to other platforms.

◇ Element Biosciences: New entrant with promising economics and accuracy, but still establishing its market presence.

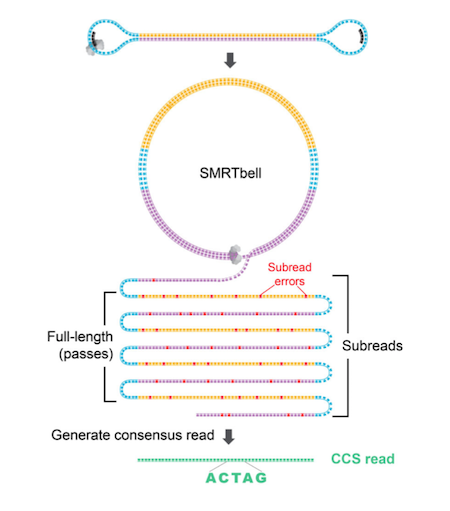

◇ PacBio: Long read lengths and high consensus accuracy, but higher cost and raw read error rates.

◇ Oxford Nanopore: Ultra-long read lengths and real-time sequencing, but higher error rates than other platforms.

The table does not include all technologies, only those most commonly used by the GDC. The table also ignores solutions for specific applications. We at the GDC believe that the question and all available resources (not just money) should determine the sequencing technology. There is no point in aiming for long reads if you do not have enough and/or highly fragmented starting DNA.

NGS Sequence File Format (Raw Data)

Illumina sequence reads are usually provided as de-multiplexed fastq.gz files. PacBio sequence reads are provided as either fastq, circular consensus sequences (ccs) fastq or BAM files. CCS files can be considered as error corrected sequence reads. Shorter sequence fragments are read several time (circled) during a PacBio run and the accuracy increases with each pass. Oxford Nanopore (ONT) raw data are provided as FAST5 (older) POD5 (newer) files and must be base-called using e.g. Dorado to obtain fastq files.

FASTQ has emerged as a common file format for sharing sequencing read data, combining both the sequence and an associated per-base quality score. You should have the appropriate FASTQ file for each sample (Illumina single-end (SR), PacBio and Nanopore data). For an Illumina paired-end (PE) run, you should have two FASTQ files (i.e. R1 and R2) per sample.

Data Archives

It is important that you share your data and perhaps some other resources with the scientific community. Submit your raw sequencing data to one of three data repositories. The European Nucleotide Archive (ENA), the GenBank / Sequence Read Archive (SRA) at NCBI and the DNA DataBank of Japan (DDBJ) are all part of the International Nucleotide Sequence Database Collaboration and exchange data on a regular basis.

- International Nucleotide Sequence Database Collaboration

- European Nucleotide Archive (ENA)

- ENA: Guidelines and Tutorials

- NCBI: Sequence Read Archive (SRA)

There are other not-for-profit resource repositories which promote the publication, curation and preservation of all types of data.

Choosing the best is choosing the right!

It is important to note that choosing the best not-for-profit repository depends on several factors, including the type of data, the subject area, and the specific requirements for storing and sharing the data. It is recommended that you review the features and policies of each repository to select the one that best suits your needs.

Challenges

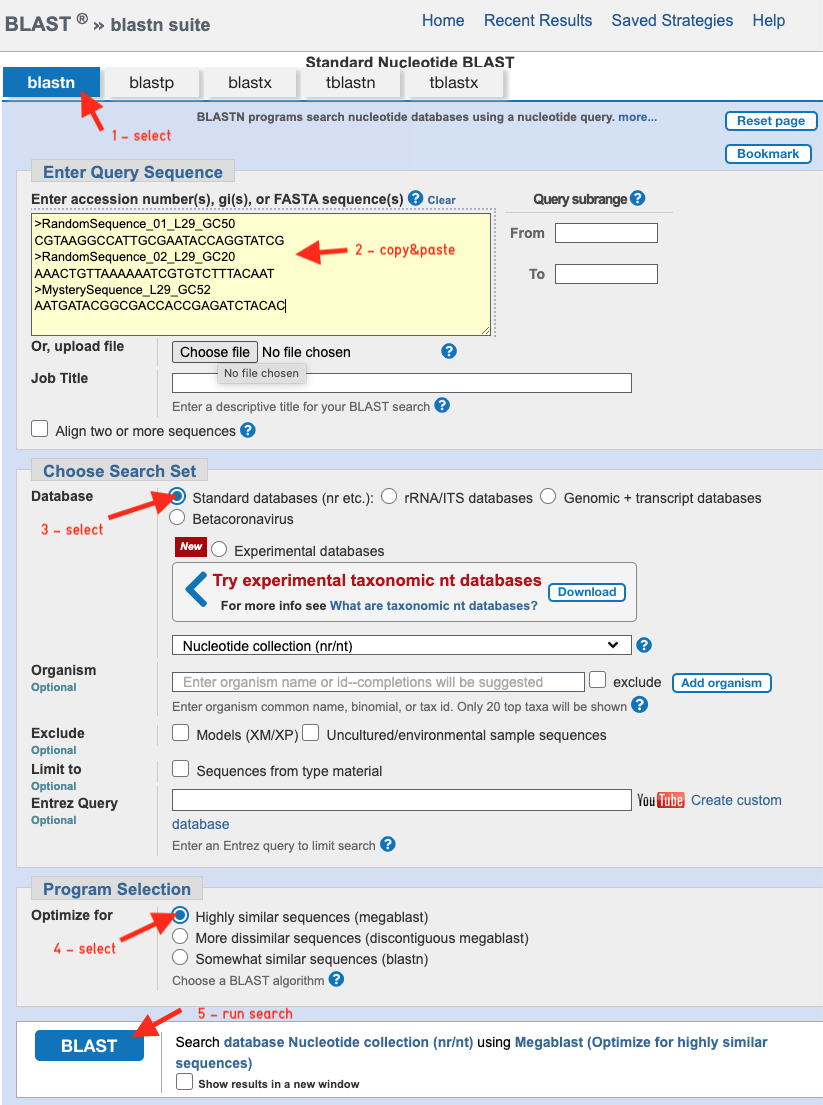

For the following challenges, I assume you are familiar with (NCBI) Blast searches. There are NCBI blast tutorials and NCBI webinars available to get started or to learn more.

First, we generate two 29nt long random DNA sequences with a GC content of 50% and 20%. You can either use the Random Sequence Generator provided by molbiotools, write your own (see inspiration below), use the example below, or simply do it by hand.

Ideas for a Random-Sequence-Generator(s)

A simple bash solution:

rm RandomSequence.fa; touch RandomSequence.fa

echo ">RandomSequence (29nt)" >> RandomSequence.fa

cat /dev/urandom | tr -dc 'AACTTG' | fold -w 29 | head -n 1 >> RandomSequence.fa

cat RandomSequence.fa

## Function to create random nucleotide sequences

generate_random_sequence <- function(length=29, frequencies=rep(0.25,4)) {

nucleotides <- c("A", "C", "G", "T")

sequence <- sample(nucleotides, length, replace = TRUE, prob = frequencies)

return(paste(sequence, collapse = ""))

}

## Function to create a random fasta sequence

generate_fasta_sequence <- function(header="RandomSequence", length=29, frequencies=rep(0.25,4)) {

sequence <- generate_random_sequence(length, frequencies)

fasta_sequence <- paste0(">", header, "\n", sequence)

return(fasta_sequence)

}

## Function to create multiple random fasta sequences

generate_random_fasta_sequences <- function(num_sequences=1, sequence_length=29, frequencies=rep(0.25,4)) {

sequences <- vector(mode = "character", length = num_sequences)

# Loop to create multiple sequences

for (i in 1:num_sequences) {

header <- paste0("Sequence_", i)

sequence <- generate_fasta_sequence(header, sequence_length, frequencies)

sequences[i] <- sequence

}

return(sequences)

}

# Usage:

num_sequences <- 1

sequence_length <- 29

nucleotide_frequencies <- c(0.4, 0.1, 0.1, 0.4) # Freq: A, C, G, T

random_sequences <- generate_random_fasta_sequences(num_sequences, sequence_length, nucleotide_frequencies)

# Print sequence(s):

for (i in 1:num_sequences) {

cat(random_sequences[i], "\n")

}

## ---------------------------------------------------

## R Script

## Create a Random Nucleotide Sequences

## Version: 08.02.2019

## ---------------------------------------------------

# Requirements

# library(seqinr)

## User Input

SeqN <- 3 # Number of sequences

SeqL <- 29 # Sequence length

NucF <- c(0.25, 0.25, 0.25, 0.25) # Nucleotide Frequency: A T C G

## Settings

set.seed(060623)

DNA <- c ("A", "T", "C", "G")

GCcontent <- sum(NucF[3:4])*100

## Delete (Previous) Output

FastaOutput <- "RandomSequence.fasta"

if (file.exists(FastaOutput)) {

unlink(FastaOutput)

print("Previous output is deleted.")

} else{

print("File does not exists.")

}

## Create Random Fasta File

for (i in 1:SeqN) {

FastaHeader <- paste("RandomSequence_", i," L:",SeqL, "nt", " GC:" , GCcontent, "%", sep="")

DNASeq <- paste(sample(DNA,

SeqL,

replace = TRUE,

prob = NucF),

collapse = "")

seqinr::write.fasta(DNASeq,

FastaHeader,

paste("RandomSequence", ".fasta", sep=""),

open = "a",

nbchar = 60,

as.string = TRUE)

}

Possible sequence example:

>RandomSequence_01_L29_GC50

CGTAAGGCCATTGCGAATACCAGGTATCG

>RandomSequence_02_L29_GC20

AAACTGTTAAAAAATCGTGTCTTTACAAT

❖ Challenge #1: What is the text-based format representing the two nucleotide sequences above called and what would be an appropriate extension for the file?

Suggestion #1

The file format is called fasta (see [Biocomputing] for more help) and the file extension (suffix) should be "fa" (e.g. seq.fa) or fasta (e.g. seq.fasta). If the sequences are aligned, the file extension could be afa (e.g. alignment.afa). Possible file name: RandomSequences.fa

❖ Challenge #2: How many ways are there to build a 29nt long sequence? In other words, what is the probability that we will make exactly the same sequences twice (with the same CG content)? Also consider how the GC content affects this probability.

Suggestion #2

We have 4 possibilities (nucleotides) for each of the 29 sites, resulting in 4^29 = 2.88e17 possible nucleotide sequences. The likelihood is pretty slim!

Add the following "unknown" sequence to the previous two randomly generated sequences.

>MysterySequence_L29_GC??

AATGATACGGCGACCACCGAGATCTACAC

❖ Challenge #3: What is the GC content of the unknown mystery sequences?

Suggestion #3

It is ~52% (15/29) and similar to random sequence #1. You could use `bash` or `R` to calculate the GC-content. Alternatively, you could try `comseq` from the EMBOSS package with word size 1 to calculate nucleotide frequencies.

A first simple solution in `bash` to explore sultions:

# Total number of nucleotides (length)

echo -n "AATGATACGGCGACCACCGAGATCTACAC" | wc -c

# ... an alterantive

string="AATGATACGGCGACCACCGAGATCTACAC"

echo "length is ${#string}"

# Number of Cs and Gs

echo -n "AATGATACGGCGACCACCGAGATCTACAC" | sed -E 's/A|T//g' | wc -c

# ... an alterantive

echo "number of Cs: $(echo $string | grep -o 'C' | wc -l)"

echo "number of Gs: $(echo $string | grep -o 'G' | wc -l)"

# GC-Content

echo "scale=2; 15/29*100" | bc

string="AATGATACGGCGACCACCGAGATCTACAC"

# Convert the string to uppercase to account for both uppercase and lowercase characters

string=$(echo "$string" | tr '[:lower:]' '[:upper:]')

# Count the total number of characters in the string

string_length=${#string}

# Count the occurrences of 'G' and 'C' in the string

count_GC=$(echo "$string" | grep -o '[CG]' | wc -l)

# Calculate the percentage of 'G'

percentage_GC=$((count_GC * 100 / string_length))

# Print the results

echo "GC-Content: $percentage_GC%"

GCcontent <- function(sequence){

# Function to calcualte GC content

# Usage: GCcontent("AACCTTGG")

# "CG content is 50 %"

sequence_caps <- toupper(sequence)

split_sequence <- strsplit(sequence_caps, "")[[1]]

sequence_length <- length(split_sequence)

gc_content <- sum(split_sequence == "C" | split_sequence == "G")

return(paste("GC content is", round((gc_content/sequence_length)*100, 1), "%"))

}

Next, we search the largest available collection of nucleotide sequences to look for similar known sequences. To do this, we compare (blast) all three sequences against the NCBI nt BLAST-Database.

- Choose "Nucleotide BLAST"

- Copy and paste the three sequences into the "Enter Query Sequence" field.

- Choose database "Standard databases (nr etc.)"

- Select "megablast"

- Run BLAST

❖ Challenge #4a: What are your expectations?

My expectations are ...

→ Seq1: It is a well balanced (GC content 0.5) random sequence and therfore we would not expect a perfect hit. The sequence is, however, short and the database is rather large and one-hit wonders cannot be fully excluded.

→ Seq2: There is a good chance that we get some good hits because of the lower complexity. Sequence complexity is an important factor in alinments.

→ Seq3: Not sure. It seems that random blast hits depend on sequence length and complexity (among other factors). If it is a radom sequences, I would also expect no prefect hits similar to random sequence #1. If there are multiple hits the sequences might not be random at all. The Blast result could give hints.

❖ Challenge #4b: Interpret your Blast results carefully and compare it with your expectations. Do you have any idea what the unknown sequence #3 could be?

Blast Results?

→ Seq1: As expected, there is no perfect hit with 100% coverage and 100% identity. There are some possible hits with shorter sequences or with mis-matches.

→ Seq2: The result is similar to the previous sequence. Why?

→ Seq3: Compare to sequence #1 and my expectations, the blast result shows numerous perfect hits (100% similarity and 100% coverage) with a wide range of possible taxonomic classifications. According to the blast results the unknown sequence could be associated to carp, sea anemone, pineapple, fungi, bacterium or even SARS-CoV-2.

Do you have a good explanation for the puzzling BLAST results of Sequence #3? Do you agree that it is not a fluke but something real? Could it be an ultra-conserved element (UCE) or is it an artefact? Perhaps the internet can help? The sequence is short enough to be used in a Google search, for example.

What could be the origin of the "mystery" sequence?

It is not a UCE, but more likely the result of careless MPS data preparation. The sequence is a perfect match for the oligonucleotides used in Illumina (Nextera) library preparation. It is part of an adaptor sequence that you add to your target DNA fragments to sequence them. Quality reports should indicate adaptor contamination and there are many (freely available) tools (e.g. fastp, Cutadapt or Trimmomatic) to remove such artificially introduced sequences prior to assembly.

Take a look at the Illumina adaptor sequences (link below) and blast a few more. You will see that the problem of adapter contamination is widespread.

Currently, all sequencing platforms use adapters, including single-molecule-based platforms such as PacBio or ONT. There are a variety of applications that can be used to remove adaptors and not all of them require a sequencing template. Some sequencing vendors offer adapter removal, but you will still need to verify this.

❖ Challenge #5 Here is another 29bp long piece of DNA. Any idea what it might be?

>AnotherMysterySequence_L29_GC41

ACCTGTACTTCGTTCAGTTACGTATTGCT

Another widely used adaptor?

We start our search with Google. Unlike the Illumina adaptor, there are no clues as to what it might be.

Let's try ChatGPT.

=> "The sequence "ACCTGTACTTCGTTCAGTTACGTATTGCT" is a DNA sequence."

How true! You can't argue with that but can we go a little deeper?

We are now trying an NCBI Blast search, perhaps this will shed some light on the matter.

=> NCBI BLAST: hits with rodents, primate, nematodes, moths, diatoms, bacteria, viruses, ...

You might have noticed that the local alignments covers position 4 to 29!

Back to Google but this time we use a shoter fragment: TGTACTTCGTTCAGTTACGTATTGCT

=> We get a search hit for a page from FreePatentsOnline.com.

More information on the ONT Adaptor: (https://community.nanoporetech.com/technical_documents/chemistry-technical-document/v/chtd_500_v1_revaq_07jul2016/adapter-sequences)

Know what you don't know.

I hope that these two examples of adapter problems teach us a valuable lesson. Just because we can all do some fancy genome sequencing and use assembler programs, we should still understand what we are doing. It is still important to understand all the details, including sample preparation and the sequencing technology used, in order to properly prepare and analyse your sequencing data. The more you know, the better you will understand your data and possible artefacts. New technology is still only as good as you are!